A Guide to No Code Web Scraping

Picture this: you need to grab competitor pricing, find new sales leads, or track market trends from a dozen different websites. A few years ago, that meant hiring a developer to write custom scripts—a slow and expensive process. Today, you can do it all yourself, without touching a single line of code. This is the reality of no code web scraping. It’s a complete game-changer, using visual tools to let anyone—not just programmers—pull valuable data from the web.

Unlock Web Data Without Writing Code

For a long time, web data extraction was stuck behind a technical wall. If you couldn't code in languages like Python, you were out of luck. This left marketers, founders, and researchers dependent on others for the data they desperately needed to make smart decisions.

No-code tools completely tear down that wall. Instead of writing complex instructions, you use a simple point-and-click interface. It’s almost like highlighting text in a document, but on a massive scale. You just show the tool what you want—a product name, a price, a contact email—and it intelligently finds and extracts similar data across hundreds or even thousands of pages automatically.

The Shift From Code to Clicks

This move away from code isn't just a passing fad; it's a fundamental change in how we access and work with information. Traditional web scraping is powerful, but it's also brittle. It requires constant upkeep from a developer, and even a small change to a website's layout can break the entire script, causing delays and adding costs.

A no-code tool like the Ultimate Web Scraper, on the other hand, puts you in the driver's seat. If a site changes, you can adjust your scraper in minutes, not days. That kind of agility gives you a serious edge.

The global web scraping market is on a steep climb, projected to nearly double from $1.03 billion in 2025 to roughly $2 billion by 2030. This isn't surprising when you consider that about 65% of enterprises worldwide already use data extraction tools. It's become a core business function. For more details, you can explore the full market report.

This explosive growth really highlights how critical accessible data has become for everything from lead generation and price monitoring to building new product features.

The difference between a visual, no-code approach and the old way of doing things is stark. Let's break it down.

No Code vs Traditional Web Scraping

| Feature | No Code Web Scraping | Traditional Web Scraping |

|---|---|---|

| Skill Level | Beginner-friendly, no programming needed | Requires expertise in languages like Python/JavaScript |

| Setup Speed | Minutes to hours | Days to weeks |

| Cost | Low monthly subscription | High development and maintenance costs |

| Maintenance | User can easily update for site changes | Requires a developer to fix broken scripts |

| Flexibility | Great for common tasks, might struggle with very complex sites | Highly customizable for any scenario |

| Best For | Marketers, sales teams, researchers, entrepreneurs | Data scientists, engineers, complex/large-scale projects |

While traditional scraping still has its place for highly complex jobs, no-code tools cover the vast majority of business needs with far less friction.

Why Businesses Are Embracing No-Code Tools

The reasons for this shift are practical and immediate. The benefits ripple across the entire organization, from the marketing team to the bottom line.

- Massive Cost Savings: You completely sidestep the need to hire or contract expensive developers for scraping projects.

- Incredible Speed: A data extraction task that might have taken weeks to scope and build can now be up and running in a single afternoon. You can go from an idea to a dataset in just a few hours.

- Empowerment for Non-Technical Teams: This is huge. Marketing, sales, and product managers can finally gather their own intelligence without adding to the engineering team's backlog.

Ready to see how it works? You can get started right away by downloading our Chrome extension. In the next few sections, I'll walk you through the exact steps to pull your first set of valuable data.

Let's Run Your First Scraping Project

Theory is one thing, but actually getting your hands on clean, structured data is where the real fun begins. Let’s walk through a no-code web scraping project together, right now. We'll tackle a classic scenario: pulling product details from an e-commerce site. My goal here is to show you just how quickly you can get a real result and build your confidence.

First things first, you'll need the right tool for the job. We’ll be using the PandaExtract - Ultimate Web Scraper, which you can add directly to your browser from the Chrome Web Store. It’s a simple but surprisingly powerful extension that gives you a point-and-click interface for any website.

Quick Tip: Before we dive in, take a second to download our free Chrome extension. Having it installed will let you follow along with these steps in real-time and make the whole process click.

Once the extension is installed, you’re all set to pull your first set of data. The whole experience is designed to be visual and intuitive, so you don't have to guess what's happening.

Your First Point-and-Click Extraction

Go ahead and navigate to any e-commerce category page—maybe a page full of laptops, sneakers, or books. Now, open the PandaExtract extension. Instead of digging into the website’s code to find the data, you just point at what you want.

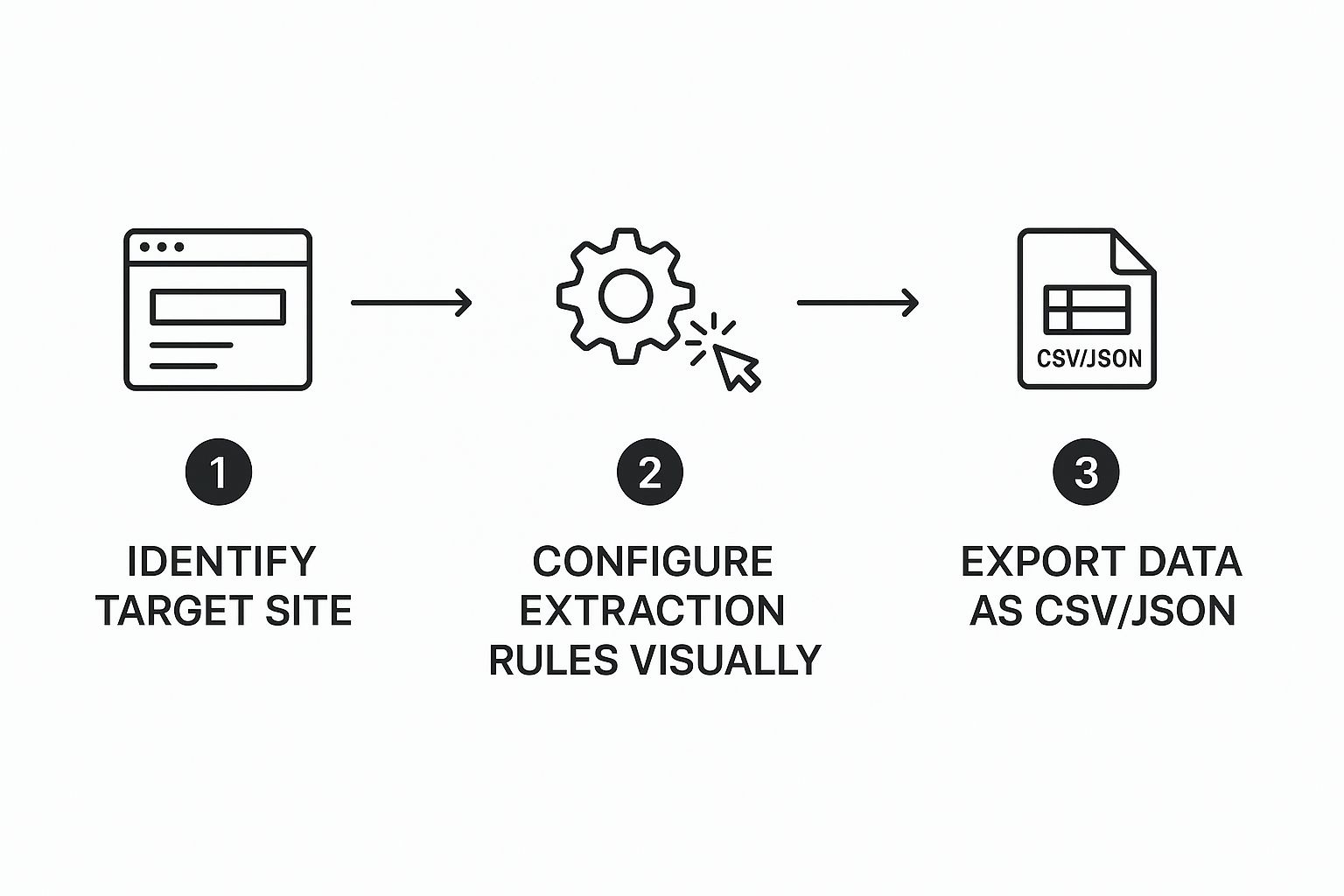

It's that simple. Here's how it works:

- Select a Product Title: Click on the name of the first product. You'll see the tool’s intelligent selection instantly highlight all the other product titles on the page. It just knows.

- Grab the Prices: Next, click on the price for that same product. Just like before, the tool recognizes the pattern and selects all the other prices automatically.

- Capture Reviews or Ratings: Do the same thing for the star rating or the review count. With every click, you’re essentially building a new column for your spreadsheet.

You've just trained the scraper on what to look for, and you haven't touched a single line of code.

This same point-and-click approach works for almost any kind of data. For instance, once you get the hang of this, you could easily figure out how to scrape reviews from Google Maps without code using the exact same principles.

Preview and Export Your Data

As you’re clicking on different elements, you’ll notice the extension’s preview window filling up in real-time. It shows you a clean, structured table of your results as you build them. You can literally watch the columns for "Product Title," "Price," and "Reviews" populate.

This instant feedback is one of my favorite features. It lets you confirm you’re grabbing the right information before you commit to the full extraction. No more running a scraper for ten minutes only to find you targeted the wrong thing!

Once you’re happy with how the preview looks, just hit the export button. You can save your freshly scraped data as a CSV or Excel file, ready to be dropped into Google Sheets or analyzed in Microsoft Excel.

In just a few minutes, you’ve turned a messy webpage into a perfectly organized dataset. That's the power of no-code web scraping.

Scaling Up with Bulk Data Extraction

Pulling data from a single page is useful, but the real magic happens when you scale up. You unlock the true power of no-code web scraping when you can automate data collection across dozens, or even hundreds, of pages at once. This is where bulk extraction turns a small data sample into a game-changing dataset.

Think about analyzing a real estate market. One page of listings gives you a tiny snapshot. But scraping all 50 pages of search results? That gives you a panoramic view of the entire inventory. Manually copying and pasting that much information would take days and be riddled with mistakes. With the right approach, you can automate the whole thing in minutes.

The secret to bulk extraction is teaching the tool how to get from one page to the next. This is a common web scraping task known as handling pagination. Most sites use simple "Next" buttons or page numbers to break up long lists, and a good no-code scraper can be told to click them automatically.

Configuring Pagination for Automated Scraping

Setting up pagination is more intuitive than it sounds. After you’ve told PandaExtract what to grab on the first page—let's say property price, address, and square footage—your only remaining job is to show it how to get to the next page of results.

You simply find the "Next Page" button or the page number links at the bottom of the list. Inside the PandaExtract extension, you just click on that element and designate it as the pagination trigger. Once you do that, the tool will extract the data on the current page, click "Next," and repeat the process until it runs out of pages.

This is a core technique for gathering large amounts of data for any project, from market research to building lead lists. For instance, the same idea applies if you want to learn how to scrape Twitter followers without code, though you’d be dealing with infinite scroll instead of a "Next" button.

The web scraping software market was valued at an estimated $782.5 million in 2025 and is projected to explode to over $3.52 billion by 2037. This growth is fueled by industries like real estate, where agencies constantly scrape Multiple Listing Services (MLS) to keep their own property databases fresh.

This automated process is a massive time-saver, allowing you to build datasets that are practically impossible to create by hand. A task that might take a small team an entire week can be done before you've even finished your morning coffee.

Automating Data Collection on a Schedule

Pulling data in bulk is a huge time-saver, but the real power comes when you automate the entire process. Why manually run an extraction when you can have fresh data delivered to you on a silver platter, exactly when you need it? This is where scheduling comes in, turning a one-off task into a continuous, hands-free intelligence stream.

Think of it as putting your data gathering on autopilot. Instead of remembering to check a competitor's website for price changes, you can set up a "recipe" to do it for you every morning at 9 AM sharp. Or maybe you need to monitor product inventory on an e-commerce site every hour. This "set it and forget it" mindset is what transforms a simple scraper into a 24/7 data-gathering operation.

This kind of continuous monitoring is a massive advantage. In fact, the web scraping tools market is projected to reach about $2.83 billion by 2025. A big driver for that growth is the need for real-time information, especially in retail and e-commerce where pricing and availability change in a flash. Businesses rely on automation to keep up. You can see the data behind these market trends for yourself.

Setting Up Your First Automated Recipe

Getting your first scheduled task up and running is more straightforward than you might think. After you've taught the tool what data to grab from a specific page, you just hop over to the scheduling options.

With PandaExtract, for example, once your extraction rules are locked in, you can dictate exactly when that job should run. The options are pretty flexible, so you can tailor the schedule to your specific project.

- Daily Runs: Perfect for getting a morning briefing on market news or what your competitors are posting on social media.

- Hourly Checks: This is a must for tracking dynamic data like stock prices, flight deals, or limited-inventory products.

- Weekly Summaries: A great way to pull together performance metrics or generate a fresh list of sales leads for the week ahead.

By automating the process, you're not just saving time; you're also eliminating the chance of forgetting or making a mistake. It frees you up to do the important work—analyzing the data and making decisions—instead of getting bogged down in the collection itself.

You set it up once, and from then on, you can be confident that an updated dataset will be waiting for you. It’s a core feature built right into powerful no-code tools.

Ready to put your own data collection on autopilot? Download our Chrome extension and see just how easy it is to schedule your first extraction.

Making Your Exported Data Work for You

Alright, so you've collected a mountain of data. That's a great start, but it's just the beginning. The real magic happens when you turn that raw information into something you can actually use to make decisions. This is where the power of no-code web scraping really shines, closing the gap between data collection and getting things done.

After your scraper finishes its run, you’re left with a clean, structured dataset. The next step is getting it out of the tool and into a format that fits what you're trying to accomplish.

CSV vs. JSON: Picking the Right Format

This isn't just a technical choice—it directly impacts how quickly and easily you can work with your data. Most no-code tools, including PandaExtract, give you a few options, but the two you'll see most often are CSV and JSON.

CSV (Comma-Separated Values): Think of this as the universal language for spreadsheets. If you want to pop your data into Google Sheets or Microsoft Excel for analysis, this is your go-to. It’s perfect for creating charts, building pivot tables, or just sorting and filtering your results to find what you need.

JSON (JavaScript Object Notation): This one is for the developers or for anyone needing to feed data into another application. If your plan is to send scraped info to a CRM, a custom-built dashboard, or another piece of software through an API, JSON is what you want. It handles complex, nested data beautifully, making it super flexible for integrations.

The point is, modern no-code web scraping tools do more than just grab data. They deliver it in a way that's immediately useful for your specific goal, whether that’s in-depth analysis or seamless software integration.

For most marketers, researchers, and entrepreneurs I talk to, starting with a CSV file is the most direct route to getting value from their data.

Prepping Your Data for Analysis

Even the cleanest scrape might need a little tidying up before you dive in. This "data cleaning" step is crucial for making sure your insights are accurate. The good news is, because your export is already structured, this part is a breeze.

You might find yourself doing small tweaks in your spreadsheet, such as:

- Removing dollar signs or currency symbols from prices so you can run calculations.

- Standardizing state abbreviations (like changing "CA" to "California").

- Splitting a "Full Name" column into separate "First Name" and "Last Name" columns.

These are the kinds of quick fixes that make a huge difference. For instance, if you're building a targeted outreach list, having clean data is essential. If that’s your end goal, you can find some great ideas on how to supercharge your B2B lead generation with AI using scraped data as your starting point.

This is another area where PandaExtract helps. It lets you preview, filter, and even make quick edits right in its interface before you export anything. This saves a ton of time and helps ensure the file you download is ready to go.

Answering Your Questions About No-Code Web Scraping

As you get your hands dirty with no-code web scraping, you’re bound to have some questions. That's a good thing. Thinking through the details from the start helps you gather data responsibly and, frankly, get much better results.

Let’s tackle some of the most common things people ask when they're new to the game.

Is This Legal and Ethical?

This is the big one, and for good reason. Generally speaking, scraping publicly available data is legal. The nuance, however, comes down to the specific website’s Terms of Service and the type of data you're after.

Being an ethical scraper really just means being a good digital citizen. I always tell people to focus on a few key things:

- Check the

robots.txtfile. Think of it as the website's welcome mat—it tells bots where they are and aren't allowed to go. - Don't hammer their servers. Sending too many requests at once can slow a site down for everyone. This is known as rate limiting, and good tools help manage this for you.

- Steer clear of personal data or anything that's clearly copyrighted.

While a solid no-code tool will have safeguards, the final responsibility is yours. Always be mindful of regulations like GDPR and respect each site's specific rules.

Can I Scrape Data from Pages That Need a Login?

Absolutely. This is one of the most powerful features of modern no-code tools, especially browser extensions like PandaExtract. The secret is that the tool piggybacks on your browser's active session.

It’s surprisingly simple. You just log into the website as you normally would. Once you’re in, the extension can see what you see, allowing it to scrape data from pages behind that login, like a private customer dashboard or a members-only forum. Just make sure you have the right to access and extract that information according to the site’s terms.

The real magic of a browser extension is how it handles these authenticated sessions. It uses your browser's existing cookies to navigate behind logins, so you don't have to mess with complicated setups. This makes it incredibly easy to scrape data from your own accounts or platforms you have permission to use.

This capability alone opens up a huge number of use cases for pulling data from your own private sources.

What Are the Limits of a No-Code Scraper?

No-code tools are fantastic, but they aren't the perfect solution for every single job. They can sometimes struggle with extremely complex websites that use a ton of custom JavaScript or have sophisticated anti-bot defenses like advanced CAPTCHAs.

If you're planning a massive, enterprise-level operation that needs millions of page requests and complex proxy management, you might find a custom-coded solution gives you more fine-tuned control. But honestly, for the vast majority of business tasks—market research, building lead lists, monitoring competitor pricing—a tool like PandaExtract has more than enough horsepower and flexibility, without any of the technical headaches.

What Happens When a Website Changes Its Layout?

Ah, the classic "scraper rot." This is when a website redesign breaks your data extractor. With a coded scraper, this usually means a frantic call to a developer to spend hours or even days debugging and rewriting the script.

This is where no-code tools really shine. Forget digging through code; you just fix it visually.

You simply open the tool on the new page layout, re-click the data elements you want (like the new location for the product name or price), and save the updated recipe. The whole fix can take just a few minutes. It turns what used to be a major project-killer into a minor tweak.

Ready to stop wondering and start answering your own questions with data? PandaExtract makes it happen without you ever touching a line of code. Download our free Chrome extension and see how easy it is to turn any website into your personal, structured database.

Article created using Outrank

Published on